XmlSecureResolver: XXE in .Net

Filed under: Development, Security, Testing

tl;dr

- Microsoft .Net 4.5.2 and above protect against XXE by default.

- It is possible to become vulnerable by explicitly setting an XmlUrlResolver on an XmlDocument.

- A secure alternative is to use the XmlSecureResolver object which can limit allowed domains.

- XmlSecureResolver appeared to work correctly in .Net 4.X, but did not appear to work in .Net 6.

I wrote about XXE in .Net a few years ago (https://www.jardinesoftware.net/2016/05/26/xxe-and-net/) and I recently started doing some more research into how it works with the later versions. At the time, the focus was on versions prior to 4.5.2 and those after it, and there wasn’t a lot after it. Now that a few more versions have appeared, I started looking at some examples.

I stumbled across the XmlSecureResolver class. It piqued my curiosity, so I started to figure out how it worked. I started with the following code snippet. Note that this was targeting .Net 6.0.

static void Load()

{

string xml = "<?xml version='1.0' encoding='UTF-8' ?><!DOCTYPE foo [<!ENTITY xxe SYSTEM 'https://labs.developsec.com/test/xxe_test.php'>]><root><doc>&xxe;</doc><foo>Test</foo></root>";

XmlDocument xmlDoc = new XmlDocument();

xmlDoc.XmlResolver = new XmlSecureResolver(new XmlUrlResolver(), "https://www.jardinesoftware.com");

xmlDoc.LoadXml(xml);

Console.WriteLine(xmlDoc.InnerText);

Console.ReadLine();

}

So what is the expectation?

- At the top of the code, we are setting some very simple XML

- The XML contains an Entity that is attempting to pull data from the labs.developsec.com domain.

- The xxe_test.php file simply returns “test from the web..”

- Next, we create an XmlDocument to parse the xml variable.

- By default (after .Net 4.5.1), the XmlDocument does not parse DTDs (or external entities).

- To allow this, we are going to set the XmlResolver. In this case, we are using the XmlSecureResolver, assuming it will help limit the entities that are allowed to be parsed.

- I will set the XmlSecureResolver to a new XmlUrlResolver.

- I will also set the permission set to limit entities to the jardinesoftware.com domain.

- Finally, we load the xml and print out the result.

My assumption was that I should get an error because the URL passed into the XmlSecureResolver constructor does not match the URL that is referenced in the xml string’s entity definition.

https://www.jardinesoftware.com != https://labs.developsec.com

Can you guess what result I received?

If you guessed that it worked just fine and the entity was parsed, you guessed correctly. But why? The whole point of XmlSecureResolver is to be able to limit this to only entities from the allowed domain.

I went back and forth for a few hours trying different configurations. Accessing different files from different locations. It worked every time. What was going on?

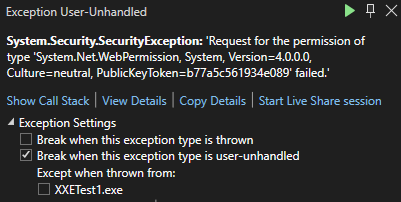

I then decided to switch from my Mac over to my Windows system. I loaded up Visual Studio 2022 and pulled up my old project from the old blog post. The difference? This project was targeting the .Net 4.8 framework. I ran the exact same code in the above snippet and sure enough, I received an error. I did not have permission to access that domain.

Finally, success. But I wasn’t satisfied. Why was it not working on my Mac? So I added the .Net 6 SDK to my Windows system and changed the target. Sure enough, no exception was thrown and everything worked fine. The entity was parsed.

Why is this important?

The risk around parsing XML like this is a vulnerability called XML External Entities (XXE). The short story here is that it allows a malicious user to supply an XML document that references external files and, if parsed, could allow the attacker to read the content of those files. There is more to this vulnerability, but that is the simple overview.

Since .net 4.5.2, the XmlDocument object was protected from this vulnerability by default because it sets the XmlResolver to null. This blocks the parser from parsing any external entities. The concern here is that a user may have decided they needed to allow entities from a specific domain and decided to use the XmlSecureResolver to do it. While that seemed to work in the 4.X versions of .Net, it seems to not work in .Net 6. This can be an issue if you thought you were good and then upgraded and didn’t realize that the functionality changed.

Conclusion

If you are using the XmlSecureResolver within your .Net application, make sure that it is working as you expect. Like many things with Microsoft .Net, everything can change depending on the version you are running. In my test cases, .Net 4.X seemed to work properly with this object. However, .Net 6 didn’t seem to respect the object at all, allowing DTD parsing when it was unexpected.

I did not opt to load every version of .Net to see when this changed. It is just another example where we have to be conscious of the security choices we make. It could very well be that this is a bug in the platform, or that they are moving away from using this as a way to allow specific domains. In either event, I recommend checking to see if you are using this and verifying that it is working as expected.
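If you need to allow entities from a specific domain and cannot rely on XmlSecureResolver, one option is to enforce the restriction yourself. The following is a minimal sketch, not a vetted implementation: the AllowListXmlResolver class is a hypothetical helper (it is not part of .Net) that derives from XmlUrlResolver and rejects any entity URI whose host is not on an allow list. It only covers the synchronous GetEntity path used by XmlDocument.LoadXml, so test it against the runtime version you actually deploy.

```csharp
using System;
using System.Collections.Generic;
using System.Xml;

// Hypothetical helper (not a framework class): only resolves external
// entities served over HTTPS from hosts on an explicit allow list.
public sealed class AllowListXmlResolver : XmlUrlResolver
{
    private readonly HashSet<string> _allowedHosts;

    public AllowListXmlResolver(IEnumerable<string> allowedHosts)
    {
        _allowedHosts = new HashSet<string>(allowedHosts, StringComparer.OrdinalIgnoreCase);
    }

    public override object GetEntity(Uri absoluteUri, string role, Type ofObjectToReturn)
    {
        // Block before any network or file access happens.
        if (absoluteUri.Scheme != Uri.UriSchemeHttps || !_allowedHosts.Contains(absoluteUri.Host))
        {
            throw new XmlException("External entity blocked: " + absoluteUri);
        }
        return base.GetEntity(absoluteUri, role, ofObjectToReturn);
    }
}

public static class AllowListDemo
{
    public static void Main()
    {
        string xml = "<?xml version='1.0' encoding='UTF-8' ?><!DOCTYPE foo [<!ENTITY xxe SYSTEM 'https://labs.developsec.com/test/xxe_test.php'>]><root><doc>&xxe;</doc><foo>Test</foo></root>";

        XmlDocument xmlDoc = new XmlDocument();
        xmlDoc.XmlResolver = new AllowListXmlResolver(new[] { "www.jardinesoftware.com" });

        try
        {
            xmlDoc.LoadXml(xml);
            Console.WriteLine(xmlDoc.InnerText);
        }
        catch (XmlException ex)
        {
            // The entity points at a host that is not on the allow list.
            Console.WriteLine("Blocked: " + ex.Message);
        }
    }
}
```

Because the check lives in your own code, its behavior does not depend on how a given .Net version treats the permission set passed to XmlSecureResolver.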

XXE DoS and .Net

XML External Entity (XXE) vulnerabilities can be more than just a risk of remote code execution (RCE), information leakage, or server-side request forgery (SSRF). A denial of service (DoS) attack is commonly overlooked. However, given a misconfigured XML parser, it may be possible for an attacker to cause a denial of service and tie up your application’s resources. This would limit the ability of a user to access the application when needed.

In most cases, the parser can be configured to just ignore any entities, by disabling DTD parsing. As a matter of fact, many of the common parsers do this by default. If the DTD is not processed, then even the denial of service risk should be removed.

For this post, I want to talk about if DTDs are parsed and focus specifically on the denial of service aspect. One of the properties that becomes important when working with .Net and XML is the MaxCharactersFromEntities property.

The purpose of this property is to limit how long the value of an entity can be. This is important because often times in a DoS attempt, the use of expanding entities can cause a very large request with very few actual lines of data. The following is an example of what a DoS attack might look like in an entity.

<?xml version="1.0" encoding="UTF-8"?> <!DOCTYPE foo [ <!ELEMENT foo ANY > <!ENTITY dos 'dos' > <!ENTITY dos1 '&dos;&dos;&dos;&dos;&dos;&dos;&dos;&dos;&dos;&dos;&dos;&dos;' > <!ENTITY dos2 '&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;&dos1;' > <!ENTITY dos3 '&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;&dos2;' > <!ENTITY dos4 '&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;&dos3;' > <!ENTITY dos5 '&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;&dos4;' > <!ENTITY dos6 '&dos5;&dos5;&dos5;&dos5;&dos5;&dos5;&dos5;&dos5;&dos5;&dos5;&dos5;&dos5;' >]>

Notice in the above example we have multiple entities that each reference the previous one multiple times. This results in a very large string being created when dos6 is actually referenced in the XML code. This would probably not be large enough to actually cause a denial of service, but you can see how quickly this becomes a very large value.

To help protect the XML parser and the application, the MaxCharactersFromEntities property limits how large this expansion can get. Once the expansion reaches the maximum, the parser throws a System.Xml.XmlException: ‘The input document has exceeded a limit set by MaxCharactersFromEntities’.

The Microsoft documentation (linked above) states that the default value is 0. This means that it is undefined and there is no limit in place. Through my testing, it appears that this is true for ASP.Net Framework versions up to 4.5.1. In 4.5.2 and above, as well as .Net Core, the default value for this property is 10,000,000. This is most likely a small enough value to protect against denial of service with the XmlReader object.
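Rather than depending on whichever default your runtime ships with, you can set the limit explicitly. The sketch below is illustrative only: the EntityLimitDemo class, the ParsesWithinLimit helper, and the small nested-entity payload are made up for this example. It enables DTD parsing on an XmlReader, applies a MaxCharactersFromEntities value, and reports whether the document could be read without exceeding it.

```csharp
using System;
using System.IO;
using System.Xml;

public static class EntityLimitDemo
{
    // Small nested-entity payload (hypothetical): &c; expands to 1,000 characters.
    public const string Payload =
        "<!DOCTYPE foo [" +
        "<!ENTITY a 'aaaaaaaaaa'>" +
        "<!ENTITY b '&a;&a;&a;&a;&a;&a;&a;&a;&a;&a;'>" +
        "<!ENTITY c '&b;&b;&b;&b;&b;&b;&b;&b;&b;&b;'>" +
        "]><foo>&c;</foo>";

    // Returns true if the document can be read without exceeding the limit.
    public static bool ParsesWithinLimit(string xml, long maxCharactersFromEntities)
    {
        var settings = new XmlReaderSettings
        {
            // DTDs must be parsed for entities to expand at all.
            DtdProcessing = DtdProcessing.Parse,
            // Explicit cap on characters produced by entity expansion.
            MaxCharactersFromEntities = maxCharactersFromEntities
        };

        try
        {
            using (XmlReader reader = XmlReader.Create(new StringReader(xml), settings))
            {
                while (reader.Read()) { }
            }
            return true;
        }
        catch (XmlException)
        {
            // Thrown when the expansion exceeds the configured limit.
            return false;
        }
    }

    public static void Main()
    {
        Console.WriteLine("Limit 100: " + ParsesWithinLimit(Payload, 100));
        Console.WriteLine("Limit 1,000,000: " + ParsesWithinLimit(Payload, 1000000));
    }
}
```

Pick a limit that fits the largest legitimate document you expect. If your application does not need DTDs at all, leaving DTD processing disabled remains the simpler choice.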

The end of Request Validation

Filed under: Development, Security

One of the often overlooked features of ASP.Net applications was request validation. If you are a .Net web developer, you have probably seen this before. I have certainly covered it on multiple occasions on this site. The main goal: help reduce XSS type input from being supplied by the user. .Net Core has opted to not bring this feature along and has dropped it with no hope in sight.

Request Validation Limitations

Request validation was a nice-to-have: a small extra layer of protection. It wasn’t foolproof and certainly had more limitations than originally expected. The biggest one was that it only supported the HTML context for cross-site scripting. Basically, it was trying to deter the submission of HTML tags or elements from end users. Cross-site scripting is context sensitive, meaning that attribute, URL, JavaScript, and CSS based contexts were not considered.

In addition, bypasses for request validation have been identified over the years. For example, using Unicode wide characters and then storing the data in ASCII format could allow bypassing the validation.

Input validation is only a part of the solution for cross-site scripting. The ultimate end-state is the use of output encoding of the data sent back to the browser. Why? Not all data is guaranteed to go through our expected inputs. Remember, we don’t trust the database.
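As a small illustration of output encoding for the HTML context, the sketch below uses System.Net.WebUtility.HtmlEncode. The OutputEncodingDemo class and RenderComment method are hypothetical names for this example, and other contexts (attribute, URL, JavaScript, CSS) need their own encoders.

```csharp
using System;
using System.Net;

public static class OutputEncodingDemo
{
    // Encodes untrusted data at the point where it is written into HTML.
    public static string RenderComment(string untrusted)
    {
        return "<p>" + WebUtility.HtmlEncode(untrusted) + "</p>";
    }

    public static void Main()
    {
        // The value could come from a form, a database, or another system.
        string fromDatabase = "<script>alert(1)</script>";

        // Prints: <p>&lt;script&gt;alert(1)&lt;/script&gt;</p>
        Console.WriteLine(RenderComment(fromDatabase));
    }
}
```

The encoding happens on output, so it applies no matter how the data got into the system.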

False Sense of Security?

Some have argued that the feature did more harm than good. It created a false sense of security compared to what it could do. While I don’t completely agree with that, I have seen those examples. I have seen developers assume they were protected just because request validation was enabled. Unfortunately, this is a bad assumption based on a mis-understanding of the feature. The truth is that it is not a feature meant to stop all cross-site scripting. The goal was to create a way to provide some default input validation for a specific vulnerability. Maybe it was mis-understood. Maybe it was a great idea with impossible implementation. In either case, it was a small piece of the puzzle.

So What Now?

So moving forward with Core, request validation is out of the picture. There is nothing we can do about that from a framework perspective. Maybe we don’t have to. There may be people that create this same functionality in 3rd party packages. That may work, it may not. Now is our opportunity to make sure we understand the flaws and proper protection mechanisms. When it comes to Cross-site scripting, there are a lot of techniques we can use to reduce the risk. Obviously I rely on output encoding as the biggest and first step. There are also things like content security policy or other response headers that can help add layers of protection. I talk about a few of these options in my new course “Security Fundamentals for Application Teams“.

Remember that understanding your framework is critical in helping reduce security risks in your application. If you are making the switch to .Net Core, keep in mind that not all the features you may be used to still exist. Understand these changes so you don’t get bitten.

Securing The .Net Cookies

Filed under: Development, Security

I remember years ago when we talked about cookie poisoning, the act of modifying cookies to get the application to act differently. An example was the classic cookie used to indicate a user’s role in the system. Often times it would contain 1 for Admin or 2 for Manager, etc. Change the cookie value and all of a sudden you were the new admin on the block. You really don’t hear the phrase cookie poisoning anymore, I guess it was too dark.

There are still security risks around the cookies that we use in our application. I want to highlight 2 key attributes that help protect the cookies for your .Net application: Secure and httpOnly.

Secure Flag

The secure flag tells the browser that the cookie should only be sent to the server if the connection is using the HTTPS protocol. Ultimately this is indicating that the cookie must be sent over an encrypted channel, rather than over HTTP which is plain text.

HttpOnly Flag

The httpOnly flag tells the browser that the cookie should only be accessed to be sent to the server with a request, not by client-side scripts like JavaScript. This attribute helps protect the cookie from being stolen through cross-site scripting flaws.

Setting The Attributes

There are multiple ways to set these attributes of a cookie. Things get a little confusing when talking about session cookies or the forms authentication cookie, but I will cover that as I go. The easiest way to set these flags for all developer created cookies is through the web.config file. The following snippet shows the httpCookies element in the web.config.

<system.web>

<authentication mode="None" />

<compilation targetFramework="4.6" debug="true" />

<httpRuntime targetFramework="4.6" />

<httpCookies httpOnlyCookies="true" requireSSL="true" />

</system.web>

As you can see, you can set httpOnlyCookies to true to set the httpOnly flag on all of the cookies. In addition, the requireSSL setting sets the secure flag on all of the cookies, with a few exceptions.

Some Exceptions

I stated earlier that there are a few exceptions to the cookie configuration. The first I will discuss is the session cookie. The session cookie in ASP.Net is hard-coded to set the httpOnly attribute. This should override any value set in the httpCookies element in the web.config. The session cookie does not default to requireSSL, and setting that value in the httpCookies element as shown above should work just fine for it.

The forms authentication cookie is another exception to the rules. Like the session cookie, it is hard-coded to httpOnly. The forms element of the web.config has a requireSSL attribute that will override what is found in the httpCookies element. Simply put, if you don’t set requireSSL="true" in the forms element, then the cookie will not have the secure flag even if requireSSL="true" is set in the httpCookies element.

This is actually a good thing, even though it might not seem so yet. Here is the next thing about that Forms requireSSL setting.. When you set it, it will require that the web server is using a secure connection. Seems like common sense, but imagine a web farm where the load balancers offload SSL. In this case, while your web app uses HTTPS from client to server, in reality, the HTTPS stops at the load balancer and is then HTTP to the web server. This will throw an exception in your application.

I am not sure why Microsoft decided to actually check this value, since the secure flag is a directive for the browser, not the server. If you are in this situation you can still set the secure flag; you just need to do it a little differently. One option is to use your load balancer to set the flag when it sends any responses. Not all devices may support this, so check with your vendor. The other option is to programmatically set the flag right before the response is sent to the user. The basic process is to find the cookie and set its .Secure property to ‘True’.
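One way to do the programmatic option is in the Application_EndRequest event of Global.asax. This is a sketch under assumptions rather than a drop-in fix: it assumes a classic ASP.Net (System.Web) application, that every cookie should be marked secure, and that the response has not already been flushed to the client by the time the event fires.

```csharp
using System;
using System.Web;

public class Global : HttpApplication
{
    // Runs at the end of each request, before the response is sent.
    protected void Application_EndRequest(object sender, EventArgs e)
    {
        // AllKeys returns a copy of the names, so updating cookies is safe here.
        foreach (string name in Response.Cookies.AllKeys)
        {
            HttpCookie cookie = Response.Cookies[name];
            if (cookie != null)
            {
                // Assumption: all cookies in this app should carry the secure flag.
                cookie.Secure = true;
            }
        }
    }
}
```

After deploying something like this, confirm in a proxy or the browser developer tools that the Set-Cookie headers actually carry the secure attribute.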

Final Thoughts

While there are other security concerns around cookies, I see the secure and httpOnly flag commonly misconfigured. While it does not seem like much, these flags go a long way to helping protect your application. ASP.Net has done some tricky configuration of how this works depending on the cookie, so hopefully this helps sort some of it out. If you have questions, please don’t hesitate to contact me. I will be putting together something a little more formal to hopefully clear this up a bit more in the near future.

ViewStateUserKey: ViewStateMac Relationship

Filed under: Development, Security, Testing

I apologize for the delay as I recently spoke about this at the SANS Pen Test Summit in Washington D.C. but haven’t had a chance to put it into a blog. While I was doing some research for my presentation on hacking ASP.Net applications I came across something very interesting that sort of blew my mind. One of my topics was ViewStateUserKey, which is a feature of .Net to help protect forms from Cross-Site Request Forgery. I have always assumed that by setting this value (it is off by default) that it put a unique key into the view state for the specific user. Viewstate is a client-side storage mechanism that the form uses to help maintain state.

I have a previous post about ViewStateUserKey and how to set it here: https://jardinesoftware.net/2013/01/07/asp-net-and-csrf/

While I was doing some testing, I found that my ViewState wasn’t different between users even though I had set the ViewStateUserKey value. Of course it was late at night.. well ok, early morning so I thought maybe I wasn’t setting it right. But I triple checked and it was right. Upon closer inspections, my view state was identical between my two users. I was really confused because as I mentioned, I thought it put a unique value into the view state to make the view state unique.

My problem… ViewStateMAC was disabled. But wait.. what does ViewStateMAC have to do with ViewStateUserKey? That is what I said. So I started digging in with Reflector to see what was going on. What did I find? The ViewStateUserKey is actually used to modify the ViewStateMAC modifier. It doesn’t store a special value in the ViewState.. rather it modifies how the MAC is generated to protect the ViewState from parameter tampering.

So this does work*. If the MAC is different between users, then the ViewState is ultimately different and the attacker’s value is different from the victim’s. When the ViewState is submitted, the MAC’s won’t match which is what we want.

Unfortunately, this means we are relying again on ViewStateMAC being enabled. Don’t get me wrong, I think it should be enabled and this is yet another reason why. Without it, it doesn’t appear that the ViewStateUserKey does anything. We have been saying for the longest time that to protect against CSRF you should set the ViewStateUserKey. No one has said that it relies on ViewStateMAC though.
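To make the relationship concrete, here is a conceptual sketch. It is not ASP.Net’s actual algorithm: the UserKeyMacDemo class, the key, and the way the user key is mixed in are all assumptions made for illustration. It shows how a per-user value can change the MAC without changing the payload itself, which is why two users can have identical ViewState data when the MAC is turned off.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class UserKeyMacDemo
{
    // Conceptual stand-in: the user key is mixed into the MAC input,
    // not stored inside the payload.
    public static string ComputeMac(byte[] serverKey, string payload, string userKey)
    {
        using (var hmac = new HMACSHA256(serverKey))
        {
            byte[] data = Encoding.UTF8.GetBytes(payload + "|" + userKey);
            return Convert.ToBase64String(hmac.ComputeHash(data));
        }
    }

    public static void Main()
    {
        byte[] serverKey = Encoding.UTF8.GetBytes("demo-only-server-key");
        string viewState = "same-form-state"; // identical payload for both users

        string attackerMac = ComputeMac(serverKey, viewState, "attacker-session");
        string victimMac = ComputeMac(serverKey, viewState, "victim-session");

        // Same payload, different user key, so the MACs do not match.
        Console.WriteLine(attackerMac == victimMac ? "MACs match" : "MACs differ");
    }
}
```

If the MAC is never computed or checked, the user key never enters the picture, which matches what I saw with ViewStateMAC disabled.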

To Recap.. Things that rely on ViewStateMAC:

- ViewState

- Event Validation

- ViewStateUserKey

It is important that we understand the framework features as disabling one item could cause a domino effect of other items. Be secure.

Authorization: Bad Implementation

Filed under: Development, Security, Testing

A few years ago, I joined a development team and got a chance to poke around a little bit for security issues. For a team that didn’t think much about security, it didn’t take long to identify some serious vulnerabilities. One of those issues that I saw related to authorization for privileged areas of the application. Authorization is a critical control when it comes to protecting your applications because you don’t want unauthorized users performing actions they should not be able to perform.

The application was designed using security by obscurity: that’s right, if we only display the administrator navigation panel to administrators, no one will ever find the pages. There was no authorization check performed on the page itself. If you were an administrator, the page displayed the links that you could click. If you were not an administrator, no links.

In the security community, we all know (or should know) that this is not acceptable. Unfortunately, we are still working to get all of our security knowledge into the developers’ hands. When this vulnerability was identified, the usual first argument was raised: "No hacker is going to guess the page names and paths." This is pretty common, usually because we don’t think of internal malicious users or of authorized individuals inadvertently sharing this information on forums. Let’s not forget DirBuster and the other file brute force tools that are available. Remember, just because you think the naming convention is clever does not mean it can’t be found.

The group understood the issue and a developer was tasked with resolving it. Great.. We are getting this fixed, and it was a high priority. The problem…. There was no consultation with the application security guy (me at the time) about the proposed solution. I don’t have all the answers, and anyone who says they do is foolish. However, it is a good idea to talk with an application security expert when it comes to a large scale remediation of such a vulnerability, and here is why.

The developer decided that adding a check to the Page_Init method to check the current user’s role was a good idea. At this point, that is a great idea. Looking deeper at the code, the developer only checked the authorization on the initial page request. In .Net, that would look something like this:

protected void Page_Init(object sender, EventArgs e)
{
    if (!Page.IsPostBack)
    {
        // Check the user authorization on initial load
        if (!Context.User.IsInRole("Admin"))
        {
            Response.Redirect("Default.aspx", true);
        }
    }
}

What happens if the user tricks the page into thinking it is a postback on the initial request? Depending on the system configuration, this can be pretty simple. By default, it is a little more difficult because EventValidation is enabled. Unfortunately, this application didn’t use EventValidation.

There are two ways to tell the request that it is a postback:

- Include the __EVENTTARGET parameter.

- Include the __VIEWSTATE parameter.

So let’s say we have an admin page that looks like the above code snippet, checking for admins and redirecting if not found. Accessing the page like so would bypass the admin check and display the page:

http://localhost:49607/Admin.aspx?__EVENTTARGET=

This is an easy oversight to make, because it requires a thorough understanding of how the .Net framework determines postback requests. It gives us a false sense of security because it only takes one user to know these details to then determine how to bypass the check.

Let’s be clear here: although this is possible, there are a lot of factors that determine whether it will work. For example, I have seen many pages where it was possible to do this, but all of the data was loaded on the INITIAL page load. The code may have looked like this:

protected void Page_Load(object sender, EventArgs e)
{
    if (!Page.IsPostBack)
    {
        LoadDropDownLists();
        LoadDefaultData();
    }
}

In this situation, you may be able to get to the page, but not do anything because the initial data needed hasn’t been loaded. In addition, EventValidation may cause a problem. This can happen because if you attempt a blank ViewState value it could catch that and throw an exception. In .Net 4.0+, even if EventValidation is disabled, ViewStateUserKey use can also block this attempt.

As a developer, it is important to understand how this feature works so we don’t make this simple mistake. It is not much more difficult to change that logic to test the user’s authorization on every request, rather than just on the initial page load.
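A minimal sketch of that change, assuming the same Web Forms page and "Admin" role used in the earlier snippet (the Admin class name is hypothetical and simply matches the Admin.aspx example): the role check moves outside of the IsPostBack test so it runs on every request.

```csharp
using System;
using System.Web.UI;

public partial class Admin : Page
{
    protected void Page_Init(object sender, EventArgs e)
    {
        // Check authorization on every request, postback or not.
        if (!Context.User.IsInRole("Admin"))
        {
            Response.Redirect("Default.aspx", true);
        }
    }
}
```

With the check no longer tied to IsPostBack, adding __EVENTTARGET or __VIEWSTATE to the request no longer skips it.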

As a penetration tester, we should be testing this during the assessment to verify that a simple mistake like this has not been implemented and overlooked.

This is another post that shows the importance of a good security configuration for .Net and a solid understanding of how the framework actually works. In .Net 2.0+ EventValidation and ViewStateMac are enabled by default. In Visual Studio 2012, the default Web Form application template also adds an implementation of the ViewStateUserKey. Code Safe everyone.

Handling Request Validation Exceptions

Filed under: Development

I write a lot about the request validation feature built into .Net because I believe it serves a great purpose to help reduce the attack surface of a web application. Although it is possible to bypass it in certain situations, and it is very limited to HTML context cross site scripting attacks, it does provide some built in protection. Previously, I wrote about how request validation works and what it is ultimately looking for. Today I am going to focus on some different ways that request validation exceptions can be handled.

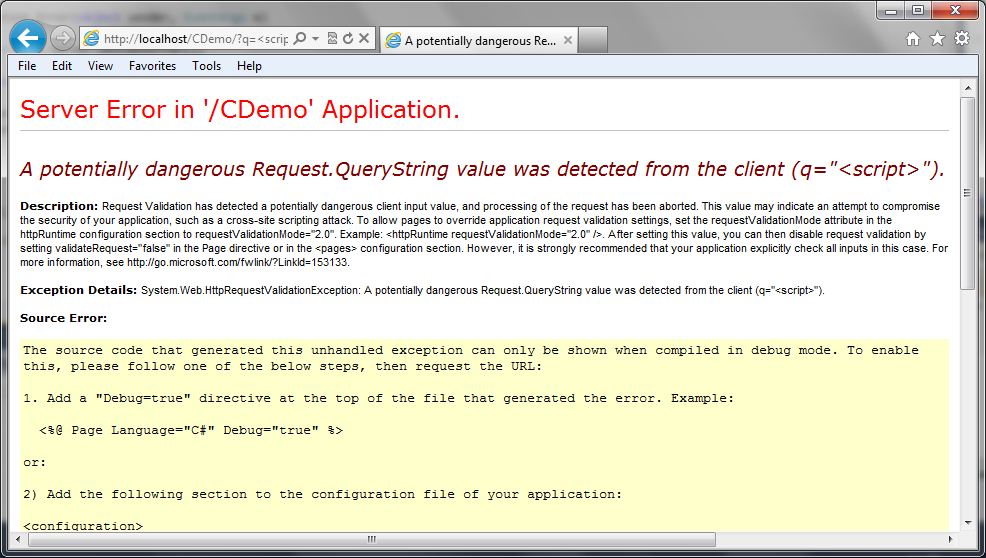

No Error Handling

How many times have you seen the “Potentially Dangerous Input” error message on an ASP.Net application? This is a sure sign to the user that the application has request validation enabled, but that the developers have not taken care to provide friendly error messages. Custom Errors, which is enabled by default, should even hide this message. So if you are seeing this, there is a good chance there are other configuration issues with the site. The following image shows a screen capture of this error message:

This is obviously not the first choice in how we want to handle this exception. For one, it gives out a lot of information about our application and it also provides a bad user experience. Users want to have error messages (no one really wants error messages) that match the look and feel of the application.

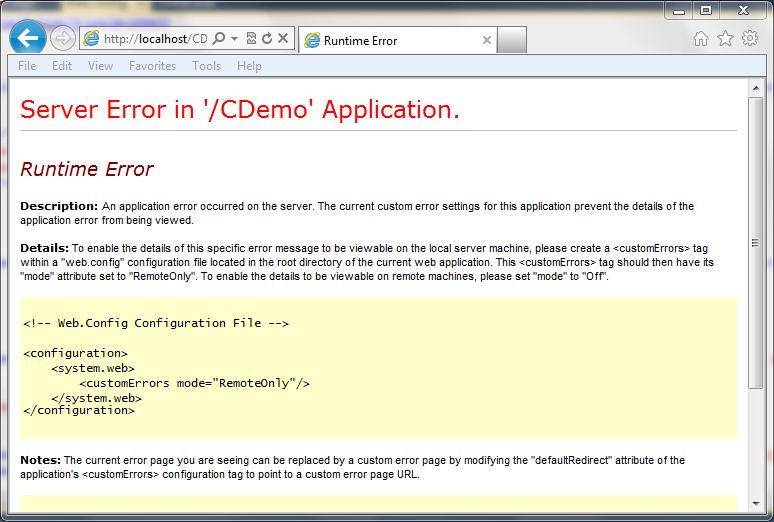

Custom Errors

ASP.Net has a feature called “Custom Errors” which is the final catch in the framework for exceptions within an ASP.Net application. If the developer hasn’t handled the exception at all, custom errors picks it up, provided it is enabled (which is the default). Just enabling custom errors is a good idea. If we just set the mode to “On” we get a less informative error message, but it still doesn’t look like the rest of the site.

The following code snippet shows what the configuration looks like in the web.config file:

<system.web>

<customErrors mode="On" />

The following screen shot shows how this looks:

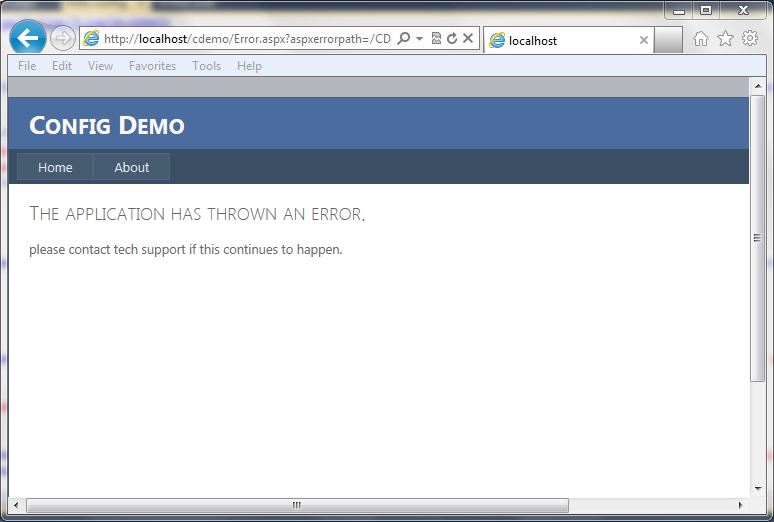

Custom Errors with Redirect

Just turning custom errors on is helpful, but it is also possible to redirect to a generic error page. This allows the errors to go to a page that contains a generic error message and can look similar to the rest of the site. The following code snippet shows what the configuration looks like in the web.config file:

<system.web>

<customErrors mode="On" defaultRedirect="Error.aspx" />

The screen below shows a generic error page:

Custom Error Handling

If you want to get really specific with handling the exception, you can add some code to the global.asax file for the application error event. This allows determining the type of exception, in this case the HttpRequestValidationException, and redirecting to a more specific error page. The code below shows a simple way to redirect to a custom error page:

void Application_Error(object sender, EventArgs e)

{

Exception ex = Server.GetLastError();

if (ex is HttpRequestValidationException)

{

Server.ClearError();

Response.Redirect("RequestValidationError.aspx", false);

}

}

Once implemented, the user will get the following screen (again, this is a custom screen for this application, you can build it however you like):

As you can see, there are many ways that we can handle this exception, and probably a few more. This is just an example of some different ways that developers can help make a better user experience. The final example screen where it lists out the characters that are triggering the exception to occur could be considered too much information, but this information is already available on the internet and previous posts. It may not be very helpful to most users in the format I have displayed it in, so make sure that the messages you provide to the users are in consideration of their understanding of technology. In most cases, just a generic error message is acceptable, but in some cases, you may want to provide some specific feedback to your users that certain data is not acceptable. For example, the error message could just state that the application does not accept html characters. At the very least, have custom errors enabled with a default error page set so that overly verbose, ugly messages are not displayed back to the user.

ASP.Net: Tampering with Event Validation – Part 2

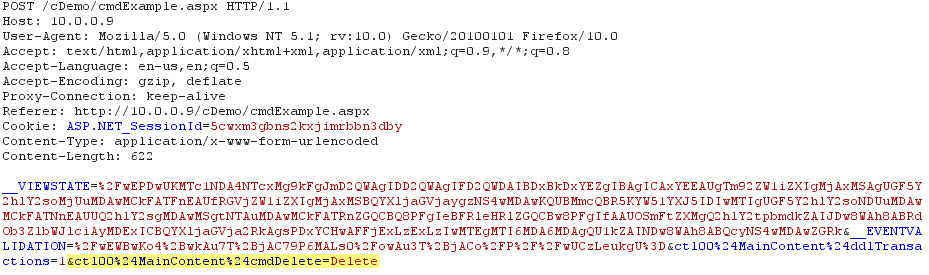

In part 1 of this series I demonstrated how to modify the values of a list box and access data I was not given access to by manipulating the view state and event validation parameters. Remember, the key to this is that ViewStateMac must be disabled. In this post, I will be demonstrating triggering button events that are not available to me.

Target Application

The target application has a simple screen that has a drop down list with some transactions and a button to view the selected transaction.

Image 1

When a user selects a transaction and clicks the “View Transaction” button, the transaction is loaded (see Image 2).

Image 2

As seen in Image 1 above, some users have access to delete the selected transaction. Image 3 shows what happens when we delete the transaction. (For this demo, the transaction is not actually deleted; a message is just displayed to keep things simple.)

Image 3

Unfortunately, the account I am using doesn’t allow me to delete transactions. Let’s take a look at how we can potentially bypass this so we can delete a transaction we don’t want.

Tools

I will only be using Burp Suite Pro and my custom Event Validation tool for this. Other proxies can be used as well.

The Process

Let’s take a look at the page as I see it (see Image 4). I will click the “View Transaction” button and view a transaction. Notice that there is no “Delete” button available.

Image 4

When I view the source of the page (Tools…View Source) I can see that the id of the “Get Transaction” button is ctl00$MainContent$cmdGetTransaction. In some cases, I may have access to the details of the delete button (that is currently invisible). In most cases, I may be stuck just making educated guesses as to what it might be. In this case I do know what it is called (ctl00$MainContent$cmdDelete), but one could easily make simple guesses like cmdDelete, cmdRemove, cmdDeleteTransaction, etc.

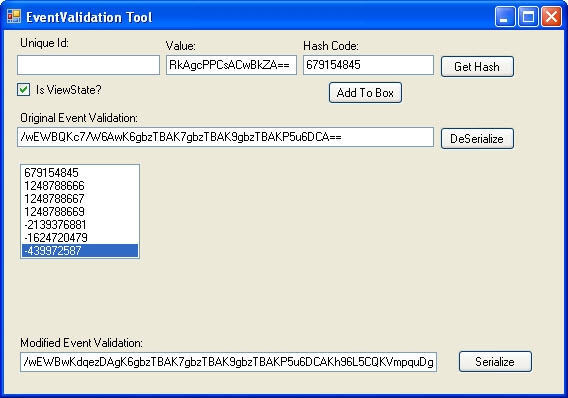

I like to avoid doing any url encoding myself, so my first step is to change my proxy into intercept mode and select a transaction to view. I want to intercept the response so that I can modify my __EVENTVALIDATION field before it goes to my page. I need to add in my button to the __EVENTVALIDATION so that ASP.Net will accept it as valid. I will use the custom Event Validation tool to do this. Image 5 shows the Event Validation application with the needed changes.

Image 5

After modifying the __EVENTVALIDATION field, I will update that in my intercepted response and then let the page continue.

Original __EVENTVALIDATION

/wEWBgKo4+wkAu7T+jAC79P6MALs0/owAu3T+jACo/P//wU=

Modified __EVENTVALIDATION

/wEWBwKo4+wkAu7T+jAC79P6MALs0/owAu3T+jACo/P//wUCzLeukgU=

Now that I have modified the data on my current page, the next step is to click the “View Transaction” button again to trigger a request. The triggered request is shown in Image 6.

Image 6

This request is triggering a “View Transaction” request, which is what we do not want. We need to change this request so that it is calling the “Delete” command instead. You can see the change in Image 7 below.

Image 7

When I continue the request and turn my intercept off I can see that my delete button click did fire and I get the message alerting me that the item was deleted (Image 8).

Image 8

Additional Notes

Since this is using a drop down list to define the transaction we are dealing with, I could combine the concepts from Part 1 in this series to delete other transactions that may not even be mine. There are a lot of possibilities with tampering with the Event Validation and View State that can cause big problems.

Conclusion

This is an overly simplified example of how events that are meant to be hidden from users can be triggered when ViewStateMAC is disabled. If you change the visibility of buttons or other event-triggering controls based on user roles, it is important that the event handler itself checks that the current user is authorized to perform the action. I personally like all of the built-in security features that .Net has, but no one should rely solely on them. Of course, there are multiple factors that go into this vulnerability, so if things are not just right, it may not work.
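As a rough illustration of that advice, here is a minimal sketch of a handler that re-checks the role itself instead of trusting that the button was hidden. It is written in Python for brevity, and every name in it (delete_transaction, the "admin" role, AuthorizationError) is hypothetical and not taken from the demo application.

```python
class AuthorizationError(Exception):
    """Raised when the current user may not perform the requested action."""


def delete_transaction(current_user_roles, transactions, transaction_id):
    # Re-check the role on the server side. Hiding the button only changes
    # what the browser shows; it does not stop the event from being posted.
    if "admin" not in current_user_roles:
        raise AuthorizationError("user may not delete transactions")
    transactions.pop(transaction_id)


transactions = {1: "coffee", 2: "rent"}
try:
    delete_transaction({"user"}, transactions, 1)
    blocked = False
except AuthorizationError:
    blocked = True
print("delete blocked for non-admin:", blocked)
```

With a check like this in place, a tampered __EVENTVALIDATION value can still fire the event, but the handler refuses to act on it.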

This information is provided as-is and is for educational purposes only. There is no claim to the accuracy of this data. Use this information at your own risk. Jardine Software is not responsible for how this data is used by other parties.

ASP.Net: Tampering with Event Validation – Part 1

Filed under: Development, Security

UPDATED 12/13/2012 – This post was updated to include a video demonstration of tampering with data with Event Validation enabled. The video is embedded at the bottom of the post.

My last post brought up the topic of tampering with Event Validation (__EVENTVALIDATION) and how it is protected with the ViewStateMAC property. This post, and the next few, will focus on how to actually tamper with the values in Event Validation to test for security vulnerabilities. We will use a very basic demo application to demonstrate this.

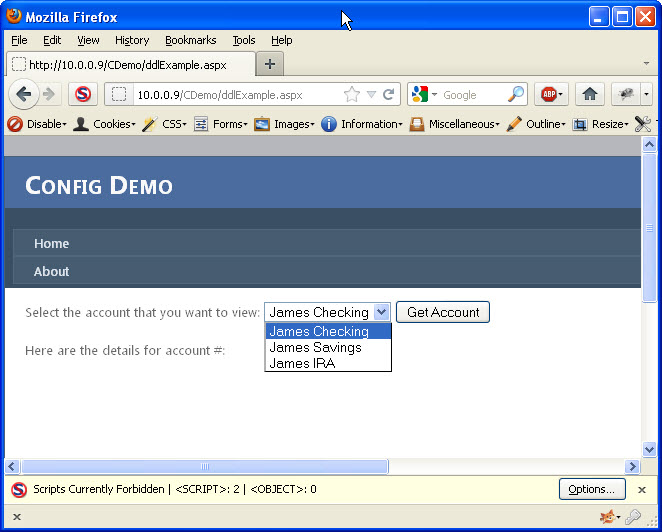

Target Application

The target application has a simple screen with a drop down list, a button, and a data grid that displays the selected account information. The drop down list is populated with accounts for the logged-on user only. I will show how the data can be modified to view another account that we were not granted access to. It is important to note that there are many variables to requests and this assumes that authorization was only checked to fill the drop down list and not when an actual account is selected. This is a pretty common practice because it is assumed that event validation will only allow the authorized drop down items (the accounts in this instance) to be selected by the user. Not all applications are written in this way, and the goal is to test to see if there are authorization or parameter tampering issues.

Tools

I will use multiple tools to perform this test. These tools are personal choice and other tools could be used. The first, and my favorite, is Burp Suite Pro. This will be my main proxy, but that is just because I like how it works better than some others. Secondly, I will be using Fiddler and the ViewState Viewer plug-in to manipulate the View State field. Finally, I have a custom application that I wrote to generate the Event Validation codes that I need to make this work.

The Process

First, I will just load the application and view the target page. Image 1 shows the initial screen with the values from the drop down list. Notice that only 3 accounts are available and they are all for James. By looking at the source for the drop down list, I can see that the values for each of those items are numeric. This is a good sign, for testing purposes. Numeric values are usually easier to guess, especially if you can find a simple pattern.

Image 1

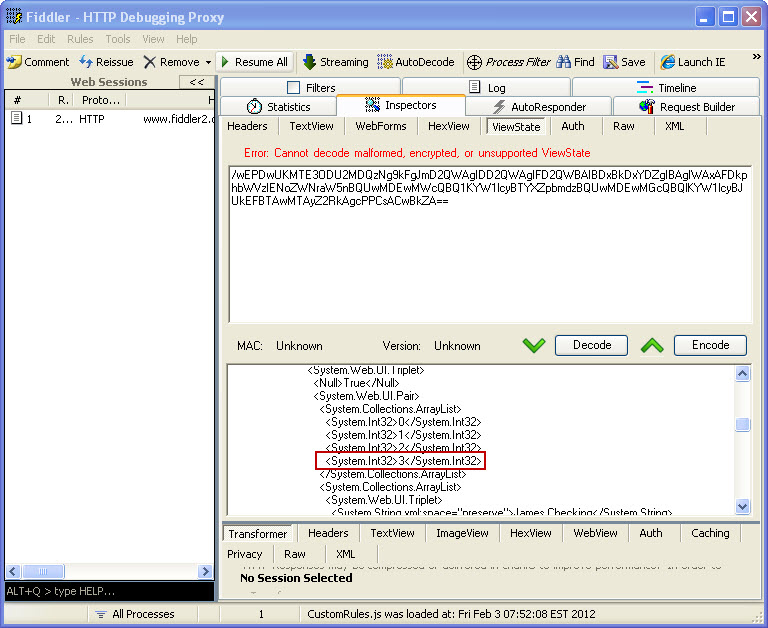

Next, it is time to de-serialize the view state so we can modify it for the value we want to add. To do this, I will use Fiddler and the ViewState Viewer plug-in. There are two changes I need to make. First, I need to add an item to the ArrayList (seen in Image 2).

Image 2

Second, I need to add the drop down list item I want to try and access. The text will not matter, but the value that I add is the key. Although this may not always be the case, in this simple example, that is what the application uses to query the account data. The value needed could be known, or you could just attempt to try different values until you find one that works. The added item can be seen here in Image 3.

Image 3

Now that my view state has been modified, I can press the “Encode” button and get the updated view state. Here are the original and modified view state values:

Original __VIEWSTATE

/wEPDwUKMTE3ODU2MDQzNg9kFgJmD2QWAgIDD2QWAgIFD2QWBAIBDxBkDxYDZg

IBAgIWAxAFDkphbWVzIENoZWNraW5nBQUwMDEwMWcQBQ1KYW1lcyBTYXZpbmdz

BQUwMDEwMGcQBQlKYW1lcyBJUkEFBTAwMTAyZ2RkAgcPPCsACwBkZA==

Modified __VIEWSTATE

/wEPDwUKMTE3ODU2MDQzNg9kFgJmD2QWAgIDD2QWAgIFD2QWBAIBDxBkDxYEZg

IBAgICAxYEEAUOSmFtZXMgQ2hlY2tpbmcFBTAwMTAxZxAFDUphbWVzIFNhdmluZ3M

FBTAwMTAwZxAFCUphbWVzIElSQQUFMDAxMDJnEAUOSGFja2VkIEFjY291bnQFBTA

wMjAxZ2RkAgcPPCsACwBkZA==
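Because this view state is neither encrypted nor protected by a MAC, it is only Base64-encoded, and the drop down items are readable once it is decoded. The following Python sketch (not the Fiddler plug-in, just a quick way to peek at the bytes) pulls the printable runs out of both values. The helper name printable_strings and the five-character minimum are my own choices for illustration.

```python
import base64
import re

# The values above, with the wrapped lines joined back together.
original_view_state = (
    "/wEPDwUKMTE3ODU2MDQzNg9kFgJmD2QWAgIDD2QWAgIFD2QWBAIBDxBkDxYDZg"
    "IBAgIWAxAFDkphbWVzIENoZWNraW5nBQUwMDEwMWcQBQ1KYW1lcyBTYXZpbmdz"
    "BQUwMDEwMGcQBQlKYW1lcyBJUkEFBTAwMTAyZ2RkAgcPPCsACwBkZA=="
)
modified_view_state = (
    "/wEPDwUKMTE3ODU2MDQzNg9kFgJmD2QWAgIDD2QWAgIFD2QWBAIBDxBkDxYEZg"
    "IBAgICAxYEEAUOSmFtZXMgQ2hlY2tpbmcFBTAwMTAxZxAFDUphbWVzIFNhdmluZ3M"
    "FBTAwMTAwZxAFCUphbWVzIElSQQUFMDAxMDJnEAUOSGFja2VkIEFjY291bnQFBTA"
    "wMjAxZ2RkAgcPPCsACwBkZA=="
)


def printable_strings(view_state):
    """Return runs of five or more printable ASCII bytes in the decoded value."""
    raw = base64.b64decode(view_state)
    return re.findall(rb"[ -~]{5,}", raw)


for label, value in (("original", original_view_state),
                     ("modified", modified_view_state)):
    print(label, printable_strings(value))
```

The modified value contains the extra “Hacked Account” item and its 00201 value in plain sight, which is exactly why ViewStateMAC matters.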

The next step is to modify the Event Validation value. There are two options to modify this value:

- Use Fiddler and the ViewState Viewer (just like how we just modified the view state)

- Use my custom application. This is the method I will use for this example. See Image 4 for a reference to the application.

I will retrieve the __EVENTVALIDATION value from the response and paste it into the Original Event Validation textbox and “De-Serialize” it. This will populate the list box with all of the original hash values. To add custom data, we must use the same technique that ASP.Net uses to calculate the hash value for the items. There are two values we need to modify in this example.

- First, I need to modify our allowed viewstate hash. To do this, I will put my modified __VIEWSTATE value into the “Value” textbox and click the “Get Hash” button. View State is a little different than other control data in that it does not require a Unique Id to be associated with it to generate the hash. The hash value generated is 679154845. This value must be placed in the first array item in event validation.

- Second, I need to add my own drop down item. Previously, I added this to the view state and used a value of 00201. Adding it to event validation is a little different. I will enter the value (00201) into the “Value” box, and then enter the drop down list's unique id (ctl00$MainContent$ddlAccounts) into the “Unique Id:” textbox. Clicking the “Get Hash” button produces the following hash: 439972587. I then add this hash value to the array.

Now that my two hashes have been added, it is time to serialize the data back into the __EVENTVALIDATION value. Here are the two different values we worked with:

Original __EVENTVALIDATION

/wEWBQKc7/W6AwK6gbzTBAK7gbzTBAK9gbzTBAKP5u6DCA==

Modified __EVENTVALIDATION

/wEWBwKdqezDAgK6gbzTBAK7gbzTBAK9gbzTBAKP5u6DCAKh96L5CQKVmpquDg==
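For anyone curious what the de-serialize and serialize steps look like, here is a minimal Python sketch that reads the list of hashes out of an __EVENTVALIDATION value and writes it back. This is not my custom application. The layout it assumes (a two-byte header, a list token of 0x16, a count, then each hash as token 0x02 followed by a 7-bit encoded integer) is inferred from how the values in this post decode, and all function names are made up for the example.

```python
import base64

HEADER = b"\xff\x01"      # first two bytes seen in both values (assumed marker/version)
TOKEN_LIST = 0x16         # assumed token for the list of hashes
TOKEN_INT32 = 0x02        # assumed token in front of every hash


def read_7bit_int(data, pos):
    """Read a 7-bit encoded integer; return (signed 32-bit value, next position)."""
    value, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        value |= (byte & 0x7F) << shift
        shift += 7
        if not byte & 0x80:
            break
    value &= 0xFFFFFFFF
    if value >= 0x80000000:
        value -= 0x100000000
    return value, pos


def write_7bit_int(value):
    """Write a signed 32-bit value using the same 7-bit encoding."""
    value &= 0xFFFFFFFF
    out = bytearray()
    while value >= 0x80:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    out.append(value)
    return bytes(out)


def decode_event_validation(text):
    data = base64.b64decode(text)
    if data[:2] != HEADER or data[2] != TOKEN_LIST:
        raise ValueError("unexpected layout")
    count, pos = read_7bit_int(data, 3)
    hashes = []
    for _ in range(count):
        if data[pos] != TOKEN_INT32:
            raise ValueError("expected an Int32 item")
        value, pos = read_7bit_int(data, pos + 1)
        hashes.append(value)
    return hashes


def encode_event_validation(hashes):
    body = bytearray(HEADER)
    body.append(TOKEN_LIST)
    body += write_7bit_int(len(hashes))
    for item in hashes:
        body.append(TOKEN_INT32)
        body += write_7bit_int(item)
    return base64.b64encode(bytes(body)).decode("ascii")


original = "/wEWBQKc7/W6AwK6gbzTBAK7gbzTBAK9gbzTBAKP5u6DCA=="
modified = "/wEWBwKdqezDAgK6gbzTBAK7gbzTBAK9gbzTBAKP5u6DCAKh96L5CQKVmpquDg=="

hashes = decode_event_validation(modified)
print(len(decode_event_validation(original)), "->", len(hashes), "hashes")
print("first hash:", hashes[0])
print("round trip matches:", encode_event_validation(hashes) == modified)
```

Under these assumptions, the first integer in the modified value decodes to 679154845, the view state hash from the step above. The last one decodes to 439972587 in magnitude but comes out negative when read as a signed 32-bit integer, so the sign shown by a given tool may differ.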

Image 4

Due to the time it can take to perform the above steps, I like to do them without holding up my request. Now that I have the values I need, I will refresh my page with my proxy set to intercept the response. I will then modify the response and change the __VIEWSTATE and __EVENTVALIDATION values to my new modified ones. I will let the response continue to allow my page to display.

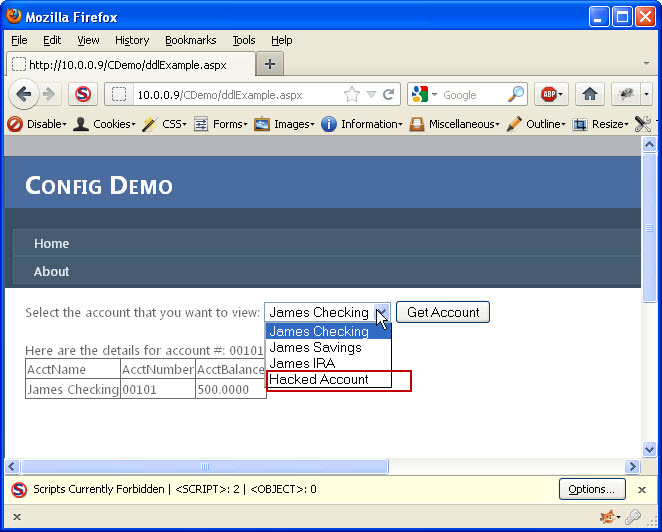

At this point, nothing appears to have happened. There is no additional drop down list item or anything else to indicate that this has worked. The key is to now just select an existing account and hit the “Get Account” button. If there is an error, something didn’t work right. If you were successful, the page should reload and you should see a new drop down list item (see Image 5).

Image 5

I now need to determine if I was lucky enough to pick a valid account number. I will select the “Hacked Account” list item (which is the one I added) and click the “Get Account” button. If no data comes back then the account number did not exist. It is back to the drawing board to try again with a different number. If, however, account details did show up, then I have successfully accessed data I was not given access to. Image 6 shows the results of a successful attempt.

Image 6

Conclusion

This showed the steps necessary to manipulate both view state and event validation to tamper with the accounts we were authorized to see. This requires that ViewStateMAC is disabled and that the view state has not been encrypted. If either of those two factors were different, this would not be possible. This demonstrates the importance of ensuring that you use ViewStateMAC to protect your view state AND event validation. You should also be performing authorization checks on each request that requests sensitive information from your application. This example application is completely fictional and there are no claims that this represents the way that any production application actually works. It will be used to demonstrate the different techniques used to develop applications to understand how they can be fixed.
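To make the per-request authorization point concrete, here is a small sketch of a lookup that checks ownership of the selected account rather than trusting the drop down list. It is Python, not the demo application's code. The ACCOUNT_OWNERS table, the user names, and get_account are hypothetical; only the account values (00100, 00101, 00102 and 00201) come from this post.

```python
# Hypothetical ownership table; a real application would query its data store.
ACCOUNT_OWNERS = {
    "00100": "james",
    "00101": "james",
    "00102": "james",
    "00201": "someone_else",
}


def get_account(current_user, account_id):
    # Check ownership on every request. An unknown account and somebody
    # else's account are rejected in the same way.
    if ACCOUNT_OWNERS.get(account_id) != current_user:
        raise PermissionError("account not available to this user")
    return {"account_id": account_id, "owner": current_user}


print(get_account("james", "00101"))
try:
    get_account("james", "00201")
    denied = False
except PermissionError:
    denied = True
print("tampered account denied:", denied)
```

With a check like this, adding 00201 to the view state and event validation would still post successfully, but no account data would come back.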


ASP.Net Insecure Redirect

Filed under: Development, Security

It was recently discovered that there was a vulnerability within the ASP.Net Forms Authentication process that could allow an attacker to force a user to visit a malicious web site upon successful authentication. Until this vulnerability was found, it was thought that the only way to allow the Forms Authentication redirect (managed by the ReturnUrl parameter) to redirect outside of the host application’s domain was to set enableCrossAppRedirects to true in the web.config file. If this property was set to false, the default value, then redirection could only occur within the current site.

So how does it work? When a user navigates to a page that requires authentication, and they are not currently authenticated, ASP.Net redirects them to the login page. This redirection appends a querystring value onto the url that, once a successful login occurs, will allow the user to be redirected to the originally requested page. Here is an example (assuming that enableCrossAppRedirects="false"):

- User requests the following page: http://www.jardinesoftware.com/useredit.aspx

- The framework recognizes that the user is not authenticated and redirects the user to http://www.jardinesoftware.com/logon.aspx?ReturnUrl=useredit.aspx

- The user enters their login information and successfully authenticates.

- The application then analyzes the ReturnUrl and attempts to determine if the Url references a location within the current domain, or if it is outside of the domain. To do this, it uses the Uri object to determine if the Url is an absolute path or not.

- If it is determined to NOT be an absolute path, by using the Uri object, then the redirect is accepted.

- If it is determined to be an absolute path, additional checks are made to determine if the hosts match for both the current site and the redirect site. If they do, a redirect is allowed. Otherwise, the user is redirected to the default page.
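The decision described in those steps can be sketched roughly as follows. This is a Python approximation using urllib, not the framework's actual code (which relies on the .Net Uri object), and the function names and the default.aspx fallback are assumptions for illustration. The vulnerability discussed here shows that checks like this are easy to get subtly wrong, so treat it as an outline of the logic rather than a hardened validator.

```python
from urllib.parse import urlsplit


def is_local_return_url(return_url, current_host):
    parts = urlsplit(return_url)
    # Treat anything with a scheme or a host part as absolute.
    is_absolute = bool(parts.scheme or parts.netloc)
    if not is_absolute:
        return True  # relative path: the redirect is accepted
    # Absolute: only allow it when the host matches the current site.
    return (parts.hostname or "").lower() == current_host.lower()


def resolve_redirect(return_url, current_host, default_page="default.aspx"):
    if is_local_return_url(return_url, current_host):
        return return_url
    return default_page


host = "www.jardinesoftware.com"
for candidate in ("useredit.aspx",
                  "http://www.jardinesoftware.com/useredit.aspx",
                  "http://evil.example/useredit.aspx"):
    print(candidate, "->", resolve_redirect(candidate, host))
```

A custom login function that redirects on its own would need to apply some form of this validation to the ReturnUrl before using it.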

The details are not fully known at this time as to what the malicious link may look like to trick ASP.Net into believing that the Url is part of the same domain, so unfortunately I don’t have an example of that. If I get one, I will be sure to post it. It does appear that some WAFs are starting to include some signatures to try and detect this, but that should be part of defense in depth, not the sole solution. Apply the patch. The bulletin for MS11-100 describes the patch that is available to remediate this vulnerability.

It is important to note that this is an issue in the FormsAuthentication.RedirectFromLoginPage method and not the Response.Redirect method. If you are doing the latter, through a custom login function, you would be required to validate the ReturnUrl yourself. If you use the default implementation to redirect a user after credentials have been verified or use the built-in asp:Login controls, you will want to make sure this patch gets applied.

This issue requires that a legitimate user clicks on a link that already has a malicious return url included and then successfully logs in to the site. This could be done through some sort of phishing scheme or by posting the link somewhere where someone would click on it. It is always recommended that when users go to a site that requires authentication that they type in the address to the site, rather than follow a link someone sent them.

This issue doesn’t have any impact on the site that requires authentication; it is strictly a way for attackers to get users to visit a malicious site of their own.

For more information you can read Microsoft’s Security Bulletin here: http://technet.microsoft.com/en-us/security/bulletin/ms11-100.

The information provided in this post is provided as-is and is for educational purposes only. It is imperative that developers understand the vulnerabilities that exist within the frameworks/platforms that they work with. Although there is not much you can really do when the vulnerability is found within the framework, understanding the possible workarounds and the risks associated with them helps determine proper remediation efforts.