ViewStateUserKey: ViewStateMac Relationship

Filed under: Development, Security, Testing

I apologize for the delay: I recently spoke about this at the SANS Pen Test Summit in Washington D.C. but haven’t had a chance to put it into a blog post until now. While researching my presentation on hacking ASP.Net applications, I came across something very interesting that sort of blew my mind. One of my topics was ViewStateUserKey, a feature of .Net that helps protect forms from Cross-Site Request Forgery. I had always assumed that setting this value (it is not set by default) put a unique key into the view state for the specific user. View state is a client-side storage mechanism that the form uses to help maintain state.

I have a previous post about ViewStateUserKey and how to set it here: https://jardinesoftware.net/2013/01/07/asp-net-and-csrf/

While I was doing some testing, I found that my ViewState wasn’t different between users even though I had set the ViewStateUserKey value. Of course, it was late at night (well, OK, early morning), so I thought maybe I wasn’t setting it right. But I triple-checked and it was right. Upon closer inspection, my view state was identical between my two users. I was really confused because, as I mentioned, I thought it put a unique value into the view state to make it unique per user.

My problem: ViewStateMAC was disabled. But wait, what does ViewStateMAC have to do with ViewStateUserKey? That is what I said. So I started digging in with Reflector to see what was going on. What did I find? The ViewStateUserKey is actually used to modify the ViewStateMac modifier. It doesn’t store a special value in the ViewState. Rather, it modifies how the MAC is generated to protect the ViewState from parameter tampering.

So this does work, with one caveat. If the MAC is different between users, then the submitted ViewState value is ultimately different, and the attacker’s value is different from the victim’s. When the attacker’s ViewState is submitted in the victim’s session, the MACs won’t match, which is what we want.
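As a rough illustration of that relationship, here is a minimal Python sketch. It is a conceptual model only, not the actual ASP.Net implementation: the HMAC-SHA256 construction, `SERVER_KEY`, the function names, and the session id strings are all assumptions made for the example. It shows that the user key changes only the MAC, and that it has no effect once the MAC is turned off.

```python
import hashlib
import hmac

# Hypothetical stand-in for the server's validation key.
SERVER_KEY = b"hypothetical-machine-key"


def protect(view_state: bytes, user_key: str = "", mac_enabled: bool = True) -> bytes:
    """Return the value sent to the client for a given page state."""
    if not mac_enabled:
        # With the MAC disabled there is nothing for the user key to modify.
        return view_state
    # The user key changes the MAC input (the "modifier"), not the payload.
    modifier = user_key.encode("utf-8")
    mac = hmac.new(SERVER_KEY, view_state + modifier, hashlib.sha256).digest()
    return view_state + mac


def validate(blob: bytes, user_key: str = "") -> bool:
    """Recompute the MAC for this user and compare it to the submitted one."""
    payload, mac = blob[:-32], blob[-32:]
    modifier = user_key.encode("utf-8")
    expected = hmac.new(SERVER_KEY, payload + modifier, hashlib.sha256).digest()
    return hmac.compare_digest(mac, expected)


state = b"serialized-control-state"
alice = protect(state, user_key="alice-session-id")
bob = protect(state, user_key="bob-session-id")
```

In this model the payload portion of `alice` and `bob` is identical and only the trailing MAC differs, so a value minted for one user fails `validate` for the other. Calling `protect` with `mac_enabled=False` returns the same bytes for every user, which matches the identical view state observed above.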

Unfortunately, this means we are relying again on ViewStateMAC being enabled. Don’t get me wrong, I think it should be enabled, and this is yet another reason why. Without it, it doesn’t appear that the ViewStateUserKey does anything. We have been saying for the longest time that to protect against CSRF you should set the ViewStateUserKey. No one has said that it relies on ViewStateMAC, though.

To recap, things that rely on ViewStateMAC:

- ViewState

- Event Validation

- ViewStateUserKey

It is important that we understand the framework features as disabling one item could cause a domino effect of other items. Be secure.

Brute Force: An Inside Job

Filed under: Development, Security, Testing

As developers, we are told all the time to protect against brute force attacks on the login screen by using a mechanism like account lockouts. We even see this on our operating systems: when we attempt multiple incorrect logins, we get locked out. Of course, as times have changed, so have some of the mitigations. For example, some systems implement a Captcha or some other means to slow down automated brute force attacks. The logistics differ on every system (how many attempts until lockout, whether there is an auto-unlock feature), but that is outside the scope of this post.

As a penetration tester, brute force attacks are something I test for on every application. Obviously, I test the login forms for this type of security flaw. There are also other areas of an application where brute force attacks can be effective. The one I want to discuss in this post is related to functionality inside the authenticated section of the application. More specifically, the change password screen. Done right, the change password screen will require the current password. This helps protect from a lot of different attacks. For example, if an attacker hijacks a user’s valid session, he wouldn’t be able to just change the user’s password. It also helps protect against cross-site request forgery for changing a user’s password. I know, I know, it can be argued that with cross-site scripting available, cross-site request forgery can still be performed, but that gets a little more advanced.

What about brute forcing the change password screen? During an assessment, I rarely see that the developers or the business have thought about this attack vector. In just about every case, I, the penetration tester, can attempt password changes with a bad current password as many times as I want, followed by a valid current password to change the password. What if an attacker hijacks my session? What if someone sits down at my desk while I have stepped away and I didn’t lock my computer (look around your office and see how many people lock their computer when they walk away)?

It is open season for an attacker to attempt a brute force attack to change the user’s password. You may wonder why we need to change the password if we have already accessed the account. The short answer is persistence. I want to be able to access the account whenever I want. Maybe I also want to keep the user from getting back in, so that I fully take over the account.

So how do we address this? We don’t want to lock the account, do we? My opinion is no, we don’t want to lock the account. Rather, let’s just end the current session in this type of event. If the application can detect that the user is not able to type the correct current password three or five times in a given time frame (sounds similar to account lockout procedures), then just end the current session. The valid user should know their password and not be mistyping it a bunch of times. An invalid user should rightfully get booted out of the system. If the valid user’s session ends, they just log back in. The attacker, however, shouldn’t be able to just log back in and may have to hijack the session again, which may be difficult.
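The idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the threshold of three failures, the five-minute window, the in-memory `active_sessions` set, and the function name are all hypothetical, and a real application would hook into its own session store.

```python
import time
from collections import defaultdict, deque

MAX_FAILURES = 3        # assumed threshold ("three or five times")
WINDOW_SECONDS = 300    # assumed time frame

_failures = defaultdict(deque)  # session id -> timestamps of recent failures
active_sessions = set()         # hypothetical stand-in for a session store


def record_failed_password_change(session_id, now=None):
    """Record a wrong current password; return True if the session was ended."""
    now = time.time() if now is None else now
    attempts = _failures[session_id]
    attempts.append(now)
    # Drop failures that fall outside the time window.
    while attempts and now - attempts[0] > WINDOW_SECONDS:
        attempts.popleft()
    if len(attempts) >= MAX_FAILURES:
        # End the session only; the account itself stays unlocked.
        active_sessions.discard(session_id)
        del _failures[session_id]
        return True
    return False
```

The change password handler would call `record_failed_password_change` each time the supplied current password is wrong and redirect to the login page when it returns `True`. Nothing here touches the account, so the valid user can simply log in again.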

Just like other brute-forcible features, there are other mitigations. A Captcha could also be used to slow down automation. The key differentiator here is that we are not locking the account, but just ending the session. This will have less impact on technical support, as the valid user can just log back in. Of course, don’t forget that you should be logging these failed attempts and auditing them to detect this attack happening.

This type of security flaw is common, not because of lazy development, but because this is fairly unknown in the development world. Rarely are we thinking about this type of issue within the application, but we need to start. Just like implementing lockouts on the login screens, there is a need to protect that functionality on the inside.

Developers, Security, Business – Let’s All Work Together

Filed under: Development, Security

A few years ago, my neighbors ran into an issue with each other. Unfortunately for one neighbor, the other neighbor was on the board of the HOA. The first neighbor decided to put up a fence, got the proper approvals and started work on it. They were building the fence themselves and it took a while to complete. The other neighbor, on the board of the HOA, noticed that the fence was being built out of compliance. Rather than stopping by and letting the first neighbor know about this, they decided it would be better to let them complete the fence and then issue a citation about the fence being out of compliance, requiring them to rebuild it. At times, I feel like I see the same thing in application security, which leads into my post below.

There have been lots of great blog posts lately talking about application security and questioning whose responsibility the security of applications is (Matt Neely – Who is Responsible for Application Security?) and why we are still not producing secure applications (Rafal Los – Software Security – Why aren’t the enterprise developers listening?). I feel a little late to the party, even though I have been thinking about this post for over a week. At least I am not the only one thinking about this topic as the new year starts off.

There has been a great divide between security personnel and the development teams. This has been no secret. They don’t hang out at the same conferences and honestly, it looks similar to a high school dance where, in this case, the security people are on one side of the gym and the developers are on the other. Add in the chaperones (the business leadership) enforcing space between the two groups. We need to find a way to fix this and work together.

The first thing we have to do is realize that security is everyone’s responsibility. This is because building applications involves so many more people than just some developers. Is SQL Injection the outcome of insecure coding? Most definitely, yes. However, that is why development teams have layers. There are those writing code, but there are also testers involved with verifying that the developer didn’t miss something. People make mistakes, and that is why we put a second and third pair of eyes on things. If developers were perfect and did everything right the first time, would there even be a need for QA? QA is a big part of this puzzle, so let’s get them involved.

I don’t know if anyone else feels this, but I feel as though there is a condescending tone that comes from both sides. Developers don’t think they need security to come in and test, and security has this notion that because they found a vulnerability, “I win” and “you lose.” We start security presentations off with “developers suck” or “we are going to pick on you today”, but why does it have to be that way? Constructive criticism is one thing, but let’s be somewhat civil about it. Who wants to go the extra mile to fix applications when the findings come across as a put-down to the developers? This isn’t boot camp where we need to destroy all morale and then build them up the way we want.

I challenge the security guys to think about this when they are getting ready to criticize the developers. What if the situation were reversed, and you were required to find EVERY security issue in an application? Would you feel any pressure? How would you feel if you missed just one simple thing because you were under a time crunch to get the assessment done? I know, the standard response is that this is why you chose the security field, so you didn’t have that requirement. I accept that response, but just want to encourage you to think about it when working with the teams that are fixing these issues. Fortunately, security practitioners don’t have this pressure. They just have to find something and add a disclaimer that they can’t guarantee the app doesn’t have other issues that were not identified during the test. Not everyone can choose to be in security; if they did, we wouldn’t have anyone developing. Don’t tell anyone… but if no one develops, there isn’t a whole lot left to manage security on. Interesting circle of life we have here.

This is not all about attitude coming from security. It goes both ways, and many times it can be worse from the dev side. I can’t tell you how many times I have seen it where the developers are upset as soon as they hear that their application is going through a penetration test. People mumbling about how their app is secure and how they don’t need the test. Unfortunately, there are many reasons why applications need to be tested by a third party. It is a part of development, get used to it. The good news is that these assessments are beneficial in multiple ways. First, you get another pair of eyes reviewing your app for stuff probably no one else on your team is thinking of. Second, you are given a chance to learn something new from the results of the test. Take the time to understand what the results are and incorporate the education into your programming. There are positives that come out of this.

Developers also need to start taking responsibility to understand and practice secure coding skills. This is paramount because so many vulnerabilities are due to just insecure coding. This involves much more than just the developers though. As I mentioned in a previous post, we need to do a better job of making this information available to the developers. If you are writing a book or tutorial, include secure coding examples rather than short examples that are insecure. Make resources easier for developers to find when they are looking for secure coding principles. Many times, it is difficult to find a good example of how to do something because everyone is afraid that if they give you something and it later becomes vulnerable, they will be liable (blog post for another lifetime). Even intro to programming courses should demonstrate how to do secure programming. How difficult is it for an intro course to show parameterized queries instead of dynamic queries? Same function, but one is secure. Why even show dynamic queries? That should be under the advanced section. Let’s start pushing this from the start.
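To show how small the difference is, here is a short sketch using Python’s built-in `sqlite3` module. The `users` table, its rows, and the function names are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "admin"), ("bob", "user")],
)


def find_dynamic(name):
    # Insecure: the input becomes part of the SQL text itself.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_parameterized(name):
    # Secure: the input is bound as data and never parsed as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


payload = "x' OR '1'='1"
```

With the classic `payload` above, `find_dynamic` returns every row in the table, while `find_parameterized` returns nothing because no user has that literal name. The two functions are the same length, which is the point: the secure version is not harder to teach.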

Eoin Keary recently posted XSS = SQLI = CMDi=? talking about the terminology used in security and how we look at the same type of vulnerability in so many different ways. I couldn’t agree more with what he wrote, and I talk about this in the SANS Dev544: Secure Coding in .Net course. I can’t count how many times I point out that the resolution for so many of these different vulnerabilities is the same: encode your output when sending it to a different system. I understand that everyone wants to be the first to discover a new class of vulnerability, but we need to start getting realistic with the “builders,” as Eoin refers to them, about what the vulnerabilities really are. If, as a developer, I don’t have 24 vulnerabilities to learn and understand, but realize there are really just 5, it becomes easier to start protecting against them. We need to get better at communicating what the problem is without overcomplicating it. I understand that the risk may be different for SQL Injection or Cross Site Scripting, but to a developer both come down to encoding untrusted data used for output in another system.
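A small Python sketch of that single principle, using only standard library helpers. The `untrusted` string is an invented example, and the two targets (an HTML page and a shell command) are just two of the possible "other systems".

```python
import html
import shlex

untrusted = "<script>alert(1)</script>; rm -rf ~"

# Same untrusted value, encoded for the system that will receive it.
html_fragment = "<p>" + html.escape(untrusted) + "</p>"
shell_command = "echo " + shlex.quote(untrusted)
```

The value never changes; only the encoding does, chosen by where the data is going. For SQL the equivalent step is binding the value as a parameter rather than concatenating it into the query.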

I think this year is off to a good start with all the conversations being held. There are some really smart people starting to think harder about how we can solve the problem. As you can hopefully see from the large amount of ranting above, it is not any one group’s fault that this is not working. There is contention from both sides. And don’t get me wrong, there are a lot of folks on both sides who are doing things right: developers who are happy to see a pen test and security folks supporting the development side. We need to stay positive and each think about how we interact with one another. We all have one goal, and that is to improve the quality and security of our systems. Let’s not lose focus of that.

ASP.Net: Tampering with Event Validation – Part 2

In part 1 of this series I demonstrated how to modify the values of a list box and access data I was not given access to by manipulating the view state and event validation parameters. Remember, the key to this is that ViewStateMac must be disabled. In this post, I will be demonstrating triggering button events that are not available to me.

Target Application

The target application has a simple screen that has a drop down list with some transactions and a button to view the selected transaction.

Image 1

When a user selects a transaction and clicks the “View Transaction” button, the transaction is loaded (see Image 2).

Image 2

As seen in Image 1 above, some users have access to delete the selected transaction. Image 3 shows what happens when we delete the transaction. (For this demo, the transaction is not actually deleted; a message is just displayed to keep things simple.)

Image 3

Unfortunately, the account I am using doesn’t allow me to delete transactions. Let’s take a look at how we can potentially bypass this so we can delete a transaction we don’t want.

Tools

I will only be using Burp Suite Pro and my custom Event Validation tool for this. Other proxies can be used as well.

The Process

Let’s take a look at the page as I see it (see Image 4). I will click the “View Transaction” button and view a transaction. Notice that there is no “Delete” button available.

Image 4

When I view the source of the page (Tools…View Source) I can see that the id of the “Get Transaction” button is ctl00$MainContent$cmdGetTransaction. In some cases, I may have access to the details of the delete button (that is currently invisible). In most cases, I may be stuck just making educated guesses as to what it might be. In this case I do know what it is called (ctl00$MainContent$cmdDelete), but one could easily make simple guesses like cmdDelete, cmdRemove, cmdDeleteTransaction, etc.

I like to avoid doing any url encoding myself, so my first step is to change my proxy into intercept mode and select a transaction to view. I want to intercept the response so that I can modify my __EVENTVALIDATION field before it goes to my page. I need to add in my button to the __EVENTVALIDATION so that ASP.Net will accept it as valid. I will use the custom Event Validation tool to do this. Image 5 shows the Event Validation application with the needed changes.

Image 5

After modifying the __EVENTVALIDATION field, I will update that in my intercepted response and then let the page continue.

Original __EVENTVALIDATION

/wEWBgKo4+wkAu7T+jAC79P6MALs0/owAu3T+jACo/P//wU=

Modified __EVENTVALIDATION

/wEWBwKo4+wkAu7T+jAC79P6MALs0/owAu3T+jACo/P//wUCzLeukgU=
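To see what actually changed between the two values, they can be decoded and compared. The Python sketch below reads the field as three header bytes, a one-byte entry count, and then a series of integer entries, each introduced by a `0x02` token and stored as a 7-bit variable-length integer. That layout is an assumption inferred from the bytes of these two specific values (with no MAC appended, since ViewStateMac is disabled); it is not a general parser for the format.

```python
import base64

ORIGINAL = "/wEWBgKo4+wkAu7T+jAC79P6MALs0/owAu3T+jACo/P//wU="
MODIFIED = "/wEWBwKo4+wkAu7T+jAC79P6MALs0/owAu3T+jACo/P//wUCzLeukgU="


def read_entries(field):
    """Decode the field as: 3 header bytes, a count byte, then int entries."""
    raw = base64.b64decode(field)
    count, pos, entries = raw[3], 4, []
    while pos < len(raw):
        if raw[pos] != 0x02:  # assumed: token that marks an integer entry
            raise ValueError("unexpected token at offset %d" % pos)
        pos += 1
        value, shift = 0, 0
        while True:  # 7-bit variable-length integer, low bits first
            byte = raw[pos]
            pos += 1
            value |= (byte & 0x7F) << shift
            shift += 7
            if byte < 0x80:
                break
        entries.append(value)
    return count, entries


original_count, original_entries = read_entries(ORIGINAL)
modified_count, modified_entries = read_entries(MODIFIED)
```

Read this way, the original holds six entries and the modified value holds seven: the count byte goes from 6 to 7, the first six entries are unchanged, and one new entry is appended at the end for the added button.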

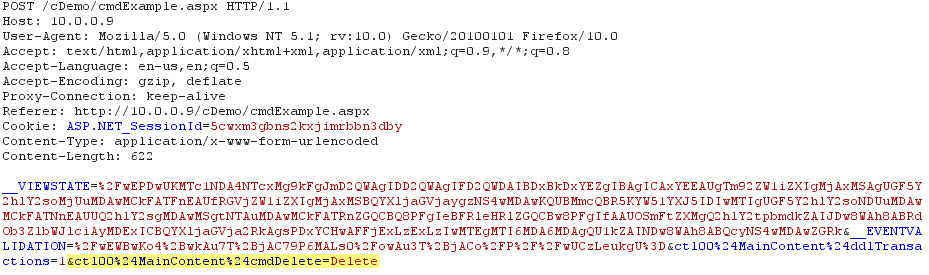

Now that I have modified the data on my current page, the next step is to click the “View Transaction” button again to trigger a request. The triggered request is shown in Image 6.

Image 6

This request is triggering a “View Transaction” request, which is what we do not want. We need to change this request so that it is calling the “Delete” command instead. You can see the change in Image 7 below.

Image 7
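For readers without the screenshots, the change amounts to swapping one form field in the POST body. The Python sketch below shows it on a made-up body. The two button names come from the page source discussed earlier, but the button values ("View Transaction", "Delete") and the `ddlTransactions` drop down field are assumptions, and a real request would also carry `__VIEWSTATE` and other fields.

```python
from urllib.parse import parse_qsl, urlencode

VIEW_BUTTON = "ctl00$MainContent$cmdGetTransaction"
DELETE_BUTTON = "ctl00$MainContent$cmdDelete"


def swap_button(body):
    """Replace the View Transaction button field with the Delete button field."""
    fields = parse_qsl(body, keep_blank_values=True)
    swapped = [
        (DELETE_BUTTON, "Delete") if name == VIEW_BUTTON else (name, value)
        for name, value in fields
    ]
    return urlencode(swapped)


# Hypothetical intercepted body, reduced to the fields that matter here.
intercepted = urlencode([
    ("__EVENTVALIDATION", "/wEWBwKo4+wkAu7T+jAC79P6MALs0/owAu3T+jACo/P//wUCzLeukgU="),
    ("ctl00$MainContent$ddlTransactions", "1"),  # hypothetical drop down field
    (VIEW_BUTTON, "View Transaction"),
])
tampered = swap_button(intercepted)
```

Everything else in the request stays as it was, including the modified `__EVENTVALIDATION` value that now lists the delete button as valid.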

When I continue the request and turn my intercept off I can see that my delete button click did fire and I get the message alerting me that the item was deleted (Image 8).

Image 8

Additional Notes

Since this is using a drop down list to define the transaction we are dealing with, I could combine the concepts from Part 1 in this series to delete other transactions that may not even be mine. There are a lot of possibilities with tampering with the Event Validation and View State that can cause big problems.

Conclusion

This is an overly simplified example of how events that are meant to be hidden from users can be triggered when ViewStateMac is disabled. If you are changing the visibility of buttons or other controls that trigger events based on users’ roles, it is important that the actual event handler checks that the current user is authorized to perform its function. I personally like all of the built-in security features that .Net has, but no one should rely solely on them. Of course, there are multiple factors that go into this vulnerability, so if things are not just right, it may not work.
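The check itself is small. Here is a framework-neutral Python sketch of a delete handler that re-checks authorization. The role name `TransactionAdmin`, the handler signature, and the dictionary standing in for the data store are all hypothetical.

```python
class NotAuthorized(Exception):
    """Raised when the current user may not perform the requested action."""


def on_delete_click(user_roles, transaction_id, transactions):
    """Server-side handler for the Delete button."""
    # Re-check the role here; hiding the button is only a UI decision.
    if "TransactionAdmin" not in user_roles:  # hypothetical role name
        raise NotAuthorized("current user may not delete transactions")
    transactions.pop(transaction_id, None)
    return "Transaction %s deleted" % transaction_id
```

With a check like this in place, the tampered request from the walkthrough would still reach the handler, but it would be rejected before anything is deleted.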

This information is provided as-is and is for educational purposes only. There is no claim to the accuracy of this data. Use this information at your own risk. Jardine Software is not responsible for how this data is used by other parties.

ASP.Net Webforms CSRF Workflow

Filed under: Security, Testing

An important aspect of application security is the ability to verify whether or not vulnerabilities exist in the target application. This task is usually outsourced to a company that specializes in penetration testing or vulnerability assessments. Even if the task is performed internally, it is important that the testers have as much knowledge about vulnerabilities as possible. It is often said that a pen test is just testing the tester’s capabilities. In many ways that is true. Every tester is different, each having different techniques, skills, and strengths. Companies rely on these tests to assess the risk the application poses to the company.

In an effort to help add knowledge to the testers, I have put together a workflow to aid in testing for Cross Site Request Forgery (CSRF) vulnerabilities. This can also be used by developers to determine if, by their settings, their application may be vulnerable. This does not cover every possible configuration, but focuses on the most common. The workflow can be found here: CSRF Workflow. I have also included the full link below.

Full Link: http://www.jardinesoftware.com/Documents/ASP_Net_Web_Forms_CSRF_Workflow.pdf

Happy Testing!!

The information is provided as-is and is for educational purposes only. Jardine Software is not liable or responsible for inappropriate use of this information.